Summary

Mission created a simple workflow to automatically remediate insecure AWS Glue Dev Endpoints. In short, any time a user or role in a given AWS Region creates a Glue Dev Endpoint, CloudTrail records the API call, and an EventBridge rule matching that event triggers a Lambda function. The function evaluates the resource’s security configuration and automatically deletes the resource if specific criteria aren’t met. The same approach can be used to evaluate and remediate almost any AWS resource whose API calls are recorded in CloudTrail.

Overview of Steps:

- Create the AWS resource in the console, both with and without appropriate security configurations

- Locate the resource-creation API call events in CloudTrail

- Create an EventBridge rule whose event pattern matches the resource-creation event

- Select acceptable criteria for an appropriately secure resource using the AWS, CloudFormation, and/or Terraform documentation

- Write a Lambda function that is triggered by the EventBridge rule, evaluates the resource, and deletes the resource if necessary

The Challenge

Many Mission customers ask about proactive security policies that go beyond the guardrails philosophy and take more of an overseer approach. Users, as a general rule, can be quite crafty, and given enough time and effort, even the most stringent guardrail policies can often be circumvented. So what are we to do with insecure resources that already exist?

A public-sector customer in the medical industry recently contracted Mission for a machine learning workload. Part of this engagement included securing the new environment’s resources and deploying a robust security auto-remediation framework for any new resources.

Today, organizations in the medical industry are understandably security-conscious, given the sensitivity of personally identifiable patient data and the requirements of HIPAA compliance. So while building a secure greenfield application is one prerequisite, you must also build mechanisms to:

- Reduce remediation time of non-compliant/insecure resources

- Remove human interaction as much as possible

- Reduce human error

- Cut down on alert fatigue

- Automatically remediate systems impacted by malicious behavior

Introducing Auto-Remediation

One strategy Mission used combines Amazon EventBridge, AWS CloudTrail, and AWS Lambda to actively monitor API calls made by IAM users and roles. Then, on a service-by-service basis, we built auto-remediation actions to detect and remediate insecure resources.

To be clear, building a comprehensive suite of auto-remediation Lambda functions can be time-consuming. Still, it provides an excellent proactive security framework that can be tailored to your organization and deployed to any account with infrastructure as code.

Let’s take the example of creating a Dev Endpoint resource in the AWS Glue service. You could try to enforce its security settings with an IAM policy, but what if a resource gets created anyway, for example by the root user or with access keys the policy doesn’t restrict? We chose the Dev Endpoint example because Glue is a relatively new service whose resources have many security configuration options that open source governance tools like Cloud Custodian don’t necessarily support. A Dev Endpoint can be created with a security configuration and security groups, launched in a particular subnet, and assigned public keys. This resource also takes a while to become available, so our remediation action needs to account for that.

Additionally, what about existing resources that such a policy would not affect? We can create an EventBridge rule that fires any time a Dev Endpoint is created or updated and then triggers a Lambda function to evaluate and, if necessary, remediate that resource.

A simple auto-remediation flow works as follows:

- An EventBridge rule monitors CloudTrail events (you can also use custom AWS Config rules)

- The rule is set as the trigger for the auto-remediation Lambda function

- The function uses the Boto3 Python library to execute an update or delete API call on the offending resource

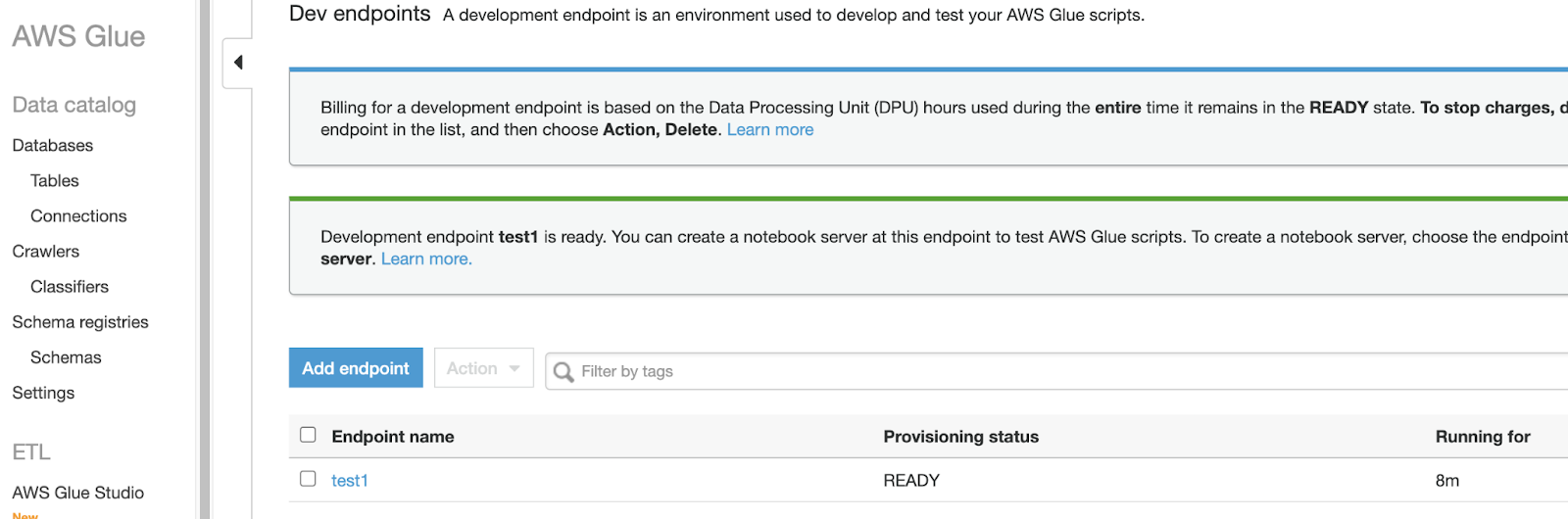

First, let’s make the resource in the console:

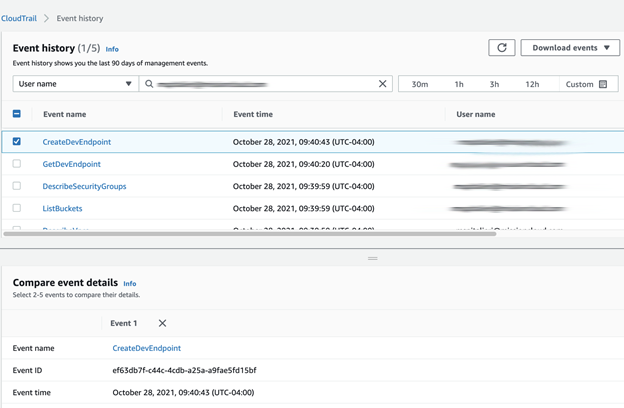

What do those incoming API requests actually look like? To find out, let’s make some changes in our AWS account, then navigate to CloudTrail Event history and filter by our username.

You can click on an event to view its JSON. Here is the part we care about, which contains all of the request parameters for the created resource:

```json
"requestParameters": {
    "endpointName": "test1",
    "numberOfNodes": 5,
    "securityConfiguration": "test-secure",
    "glueVersion": "1.0",
    "roleArn": "arn:aws:iam::123456789:role/glue-ml-test",
    "publicKeys": [],
    "arguments": {
        "--enable-glue-datacatalog": "",
        "GLUE_PYTHON_VERSION": "3"
    }
}
```

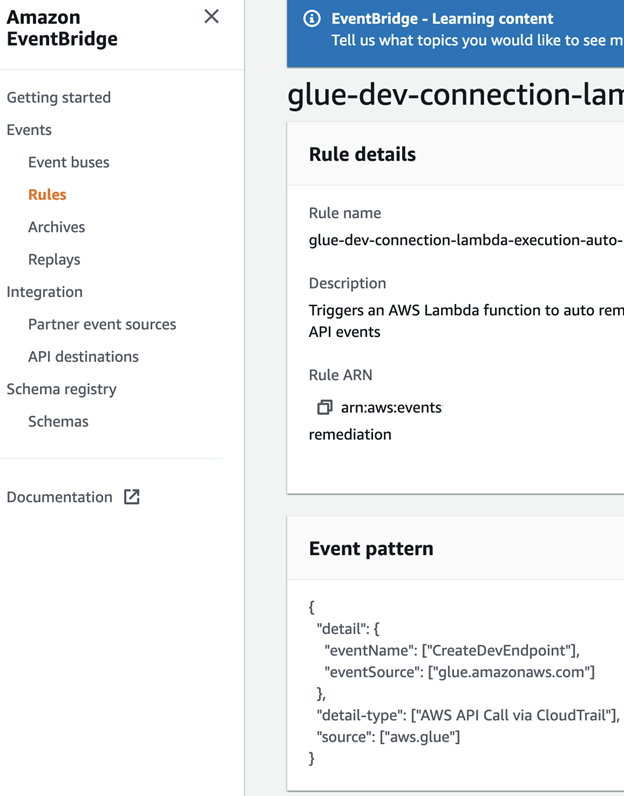

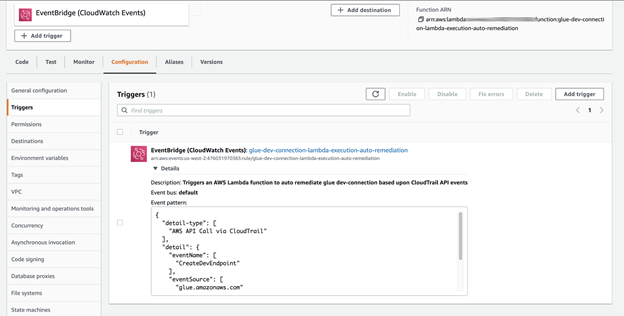
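If you prefer to pull these events programmatically instead of clicking through the console, CloudTrail’s `LookupEvents` API (`lookup_events` in Boto3) returns the same records. The sketch below is a minimal example, assuming you pass in a `boto3.client("cloudtrail")` created in the same Region as the endpoint; the helper name `find_create_dev_endpoint_events` is hypothetical. Each returned record carries the full event as a JSON string in its `CloudTrailEvent` field, which we parse to reach `requestParameters`.

```python
import json


def find_create_dev_endpoint_events(cloudtrail_client, max_results=10):
    """Return requestParameters from recent CreateDevEndpoint events in Event history."""
    response = cloudtrail_client.lookup_events(
        LookupAttributes=[
            {"AttributeKey": "EventName", "AttributeValue": "CreateDevEndpoint"}
        ],
        MaxResults=max_results,  # the API returns at most 50 events per call
    )
    results = []
    for record in response.get("Events", []):
        # The full CloudTrail record is embedded as a JSON string
        cloudtrail_event = json.loads(record["CloudTrailEvent"])
        # requestParameters can be null for some calls, so fall back to {}
        results.append(cloudtrail_event.get("requestParameters") or {})
    return results
```

Note that `lookup_events` accepts only one lookup attribute per call, so filtering by both event name and username means filtering the results in code. Event history also covers only the last 90 days of management events in the current Region.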

Now, what do we do with this? First, let’s create an EventBridge rule whose event pattern matches any time an event like this occurs. The pattern will look something like this:

```json
{
    "detail-type": [
        "AWS API Call via CloudTrail"
    ],
    "detail": {
        "eventName": [
            "CreateDevEndpoint"
        ],
        "eventSource": [
            "glue.amazonaws.com"
        ]
    },
    "source": [
        "aws.glue"
    ]
}
```
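To sanity-check a pattern like this before wiring it up, the sketch below mimics EventBridge’s exact-value matching for simple patterns: every key in the pattern must appear in the event, a list means the event’s value must equal one of its entries, and a nested object applies the same rules one level down. It is a simplified local approximation (the `matches` helper is hypothetical and ignores content filters such as `prefix` or `anything-but`); EventBridge’s own `TestEventPattern` API (`test_event_pattern` in Boto3) is the authoritative check.

```python
def matches(pattern, event):
    """Approximate EventBridge exact-value matching for simple event patterns.

    Every pattern key must exist in the event. A list in the pattern matches if the
    event value (or any element, when the event value is itself a list) equals one
    of its entries; a dict in the pattern is matched recursively. Content filters
    such as prefix, anything-but, numeric, and exists are not supported.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            candidates = actual if isinstance(actual, list) else [actual]
            if not any(value in expected for value in candidates):
                return False
    return True


# The rule's event pattern, as a Python dict
GLUE_CREATE_PATTERN = {
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventName": ["CreateDevEndpoint"],
        "eventSource": ["glue.amazonaws.com"],
    },
    "source": ["aws.glue"],
}
```

Extra fields in the event, such as `requestParameters`, are ignored, which is why the rule fires regardless of how the endpoint was configured.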

Note: We could also add the UpdateDevEndpoint eventName to catch updated resources, but that would require more logic in our Lambda function. It might also delete existing resources that receive configuration updates and impede development. For now, let’s stick with the CreateDevEndpoint event.

You can set a Lambda function as the rule’s target here; in our case, that function will evaluate the event and take action. For now, we can skip the target and simply create the EventBridge rule.
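If you manage the rule with code instead of the console, Boto3’s `put_rule` creates or updates a rule from the same pattern. This is a sketch, assuming you pass in a `boto3.client("events")`; the rule name `glue-create-dev-endpoint` and the helper `create_rule` are hypothetical.

```python
import json

# Hypothetical rule name; use whatever naming convention your account follows
RULE_NAME = "glue-create-dev-endpoint"

# Same event pattern as the console example
EVENT_PATTERN = {
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventName": ["CreateDevEndpoint"],
        "eventSource": ["glue.amazonaws.com"],
    },
    "source": ["aws.glue"],
}


def create_rule(events_client, name=RULE_NAME):
    """Create or update the EventBridge rule and return its ARN."""
    response = events_client.put_rule(
        Name=name,
        EventPattern=json.dumps(EVENT_PATTERN),  # the API expects a JSON string
        State="ENABLED",
        Description="Matches CloudTrail CreateDevEndpoint calls from AWS Glue",
    )
    return response["RuleArn"]
```

Keeping the pattern in code like this also makes it easy to deploy the same rule to every account, in line with the infrastructure-as-code approach mentioned earlier.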

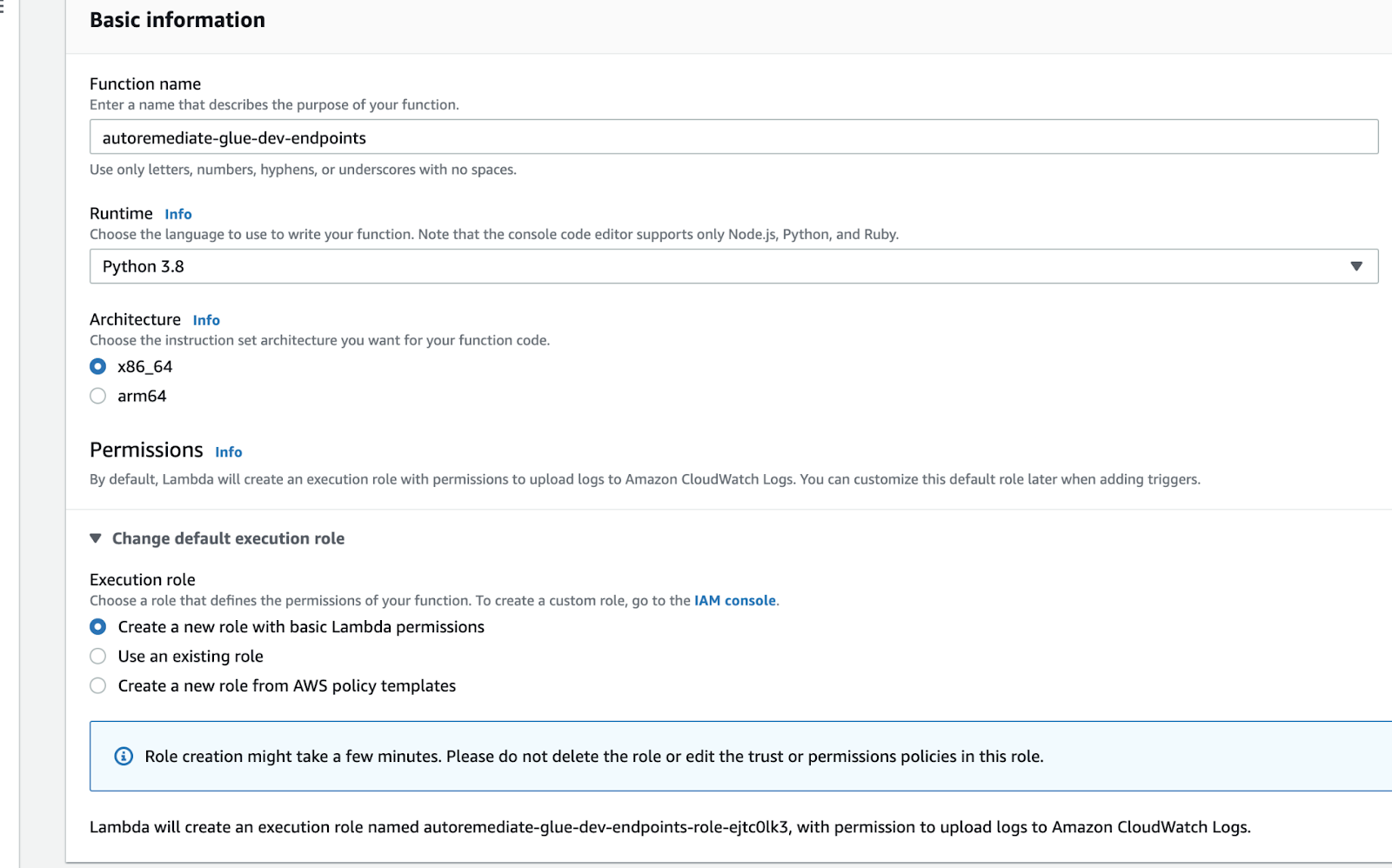

Next, let’s create the Lambda function that will evaluate the resource based on the data in the CloudTrail event. For our purposes, a Python 3.9 (or later) Lambda function using the Boto3 library will do everything we need. We will create the function from scratch, along with an IAM role that follows least privilege. We can use the default role that Lambda generates when we create the function; be sure to note the role name.

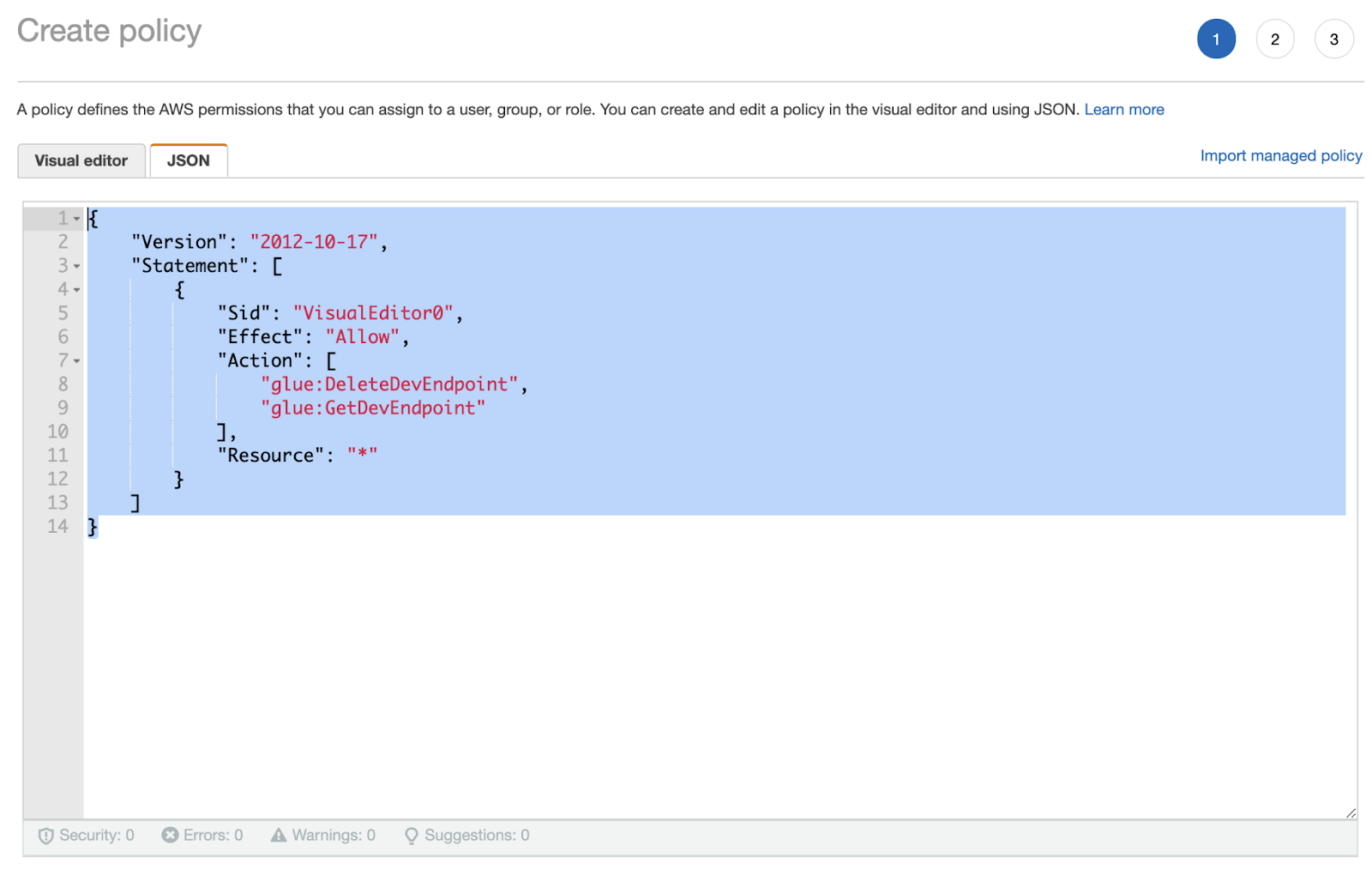

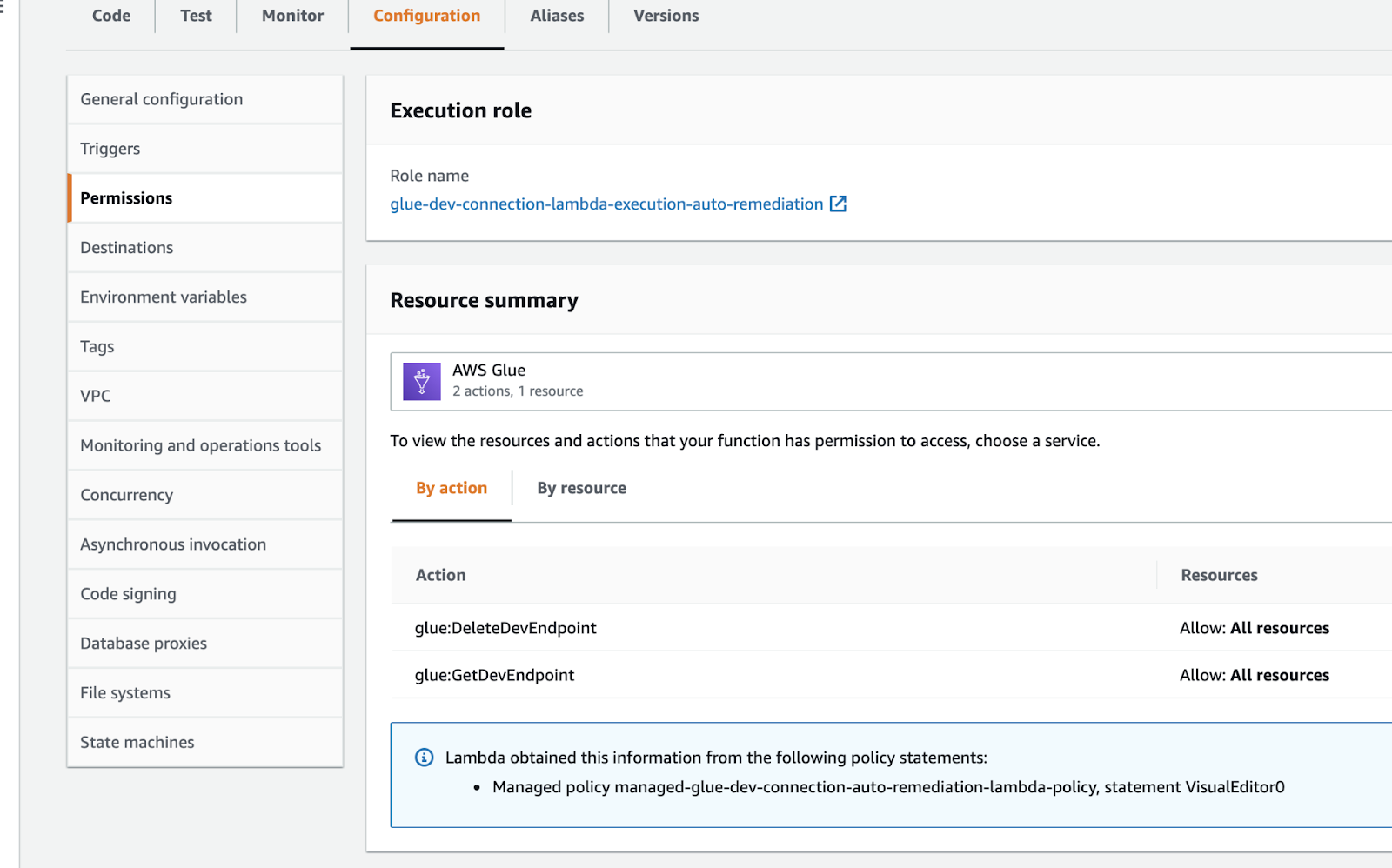
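Because the deletion step described later waits five to ten minutes for the endpoint to become READY, the function’s default 3-second timeout is far too short. You can raise it in the console, or with Boto3’s `update_function_configuration` as in this sketch, which assumes you pass in a `boto3.client("lambda")` and your function’s name (the helper name `raise_timeout` is hypothetical). Lambda caps the timeout at 900 seconds (15 minutes).

```python
# Lambda's maximum function timeout is 900 seconds (15 minutes)
MAX_LAMBDA_TIMEOUT_SECONDS = 900


def raise_timeout(lambda_client, function_name, timeout_seconds=MAX_LAMBDA_TIMEOUT_SECONDS):
    """Set the function timeout so the endpoint wait loop has time to finish."""
    if not 1 <= timeout_seconds <= MAX_LAMBDA_TIMEOUT_SECONDS:
        raise ValueError(f"timeout must be between 1 and {MAX_LAMBDA_TIMEOUT_SECONDS} seconds")
    response = lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=timeout_seconds,
    )
    # The response describes the updated configuration, including Timeout
    return response["Timeout"]
```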

Next, let’s create the policy for our Lambda role; it allows the Lambda function to query and delete Dev Endpoints.

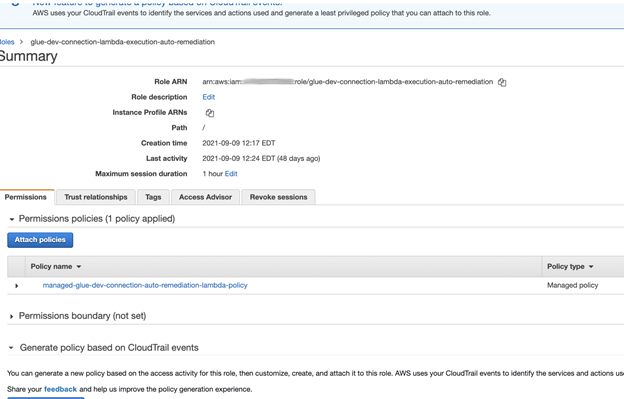

Attach this policy to the role.
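As a minimal sketch of what a least-privilege policy document might look like, the helper below assumes the function needs only `glue:GetDevEndpoint` and `glue:DeleteDevEndpoint`, scoped to Dev Endpoints in one account and Region. The helper names and the example account ID are hypothetical; if resource-level scoping gives you trouble, `"Resource": "*"` is the looser fallback. CloudWatch Logs permissions are already covered by the default execution role Lambda creates.

```python
import json


def dev_endpoint_policy(region, account_id):
    """Least-privilege policy letting the function read and delete Glue Dev Endpoints."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InspectAndDeleteDevEndpoints",
                "Effect": "Allow",
                "Action": ["glue:GetDevEndpoint", "glue:DeleteDevEndpoint"],
                # Dev Endpoint ARNs follow arn:aws:glue:<region>:<account>:devEndpoint/<name>
                "Resource": f"arn:aws:glue:{region}:{account_id}:devEndpoint/*",
            }
        ],
    }


def policy_json(region, account_id):
    """Render the policy as JSON, ready to paste into the IAM console."""
    return json.dumps(dev_endpoint_policy(region, account_id), indent=2)
```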

So our function role should look like this:

Now, it’s time to write our function. First, we need to import our packages:

```python
import json
import time

import boto3
from botocore.exceptions import ClientError
```

Next, our Lambda handler should do the following:

- Ingest the event from CloudTrail

```python
def lambda_handler(event, context):
    # Fetch the new CloudTrail event delivered by EventBridge
    print('New CloudTrail event received:')
    print(json.dumps(event))
```

- Create a boto3 client for the appropriate AWS service. Note: you can also specify an AWS Region here.

```python
    # e.g. boto3.client('glue', region_name='us-east-1')
    glue_client = boto3.client('glue')
```

- Parse the event data for the Dev Endpoint name

```python
    endpointName = event['detail']['requestParameters']['endpointName']
    print('Endpoint Name: ' + endpointName)
```

- Check for a securityConfiguration in the event data; if it is missing, delete the endpoint

```python
    if "securityConfiguration" in event['detail']['requestParameters']:
        securityConfiguration = event['detail']['requestParameters']['securityConfiguration']
        print('securityConfiguration: ' + securityConfiguration)
    else:
        # If there is no securityConfiguration, delete the endpoint
        print('securityConfiguration not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return
```

- Check for securityGroupIds in the event data; if they are missing, delete the endpoint

```python
    if "securityGroupIds" in event['detail']['requestParameters']:
        securityGroupIds = event['detail']['requestParameters']['securityGroupIds']
        print('securityGroupIds: ' + str(securityGroupIds))
    else:
        # If there are no security groups, delete the endpoint
        print('securityGroupIds not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return
```

- Check for publicKeys in the event data; if they are missing or empty, delete the endpoint

```python
    if "publicKeys" in event['detail']['requestParameters']:
        publicKeys = event['detail']['requestParameters']['publicKeys']
        print('publicKeys: ' + str(publicKeys))
        # An empty list means no public keys were supplied
        if publicKeys == []:
            print('publicKeys not found...deleting dev endpoint')
            deleteDevEndpoint(endpointName)
            return
        else:
            print('Public keys found...')
    else:
        print('publicKeys not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return
```

- Check for a subnetId in the event data; if it is missing, delete the endpoint

```python
    # Check for a subnet in the event data
    if "subnetId" in event['detail']['requestParameters']:
        subnetId = event['detail']['requestParameters']['subnetId']
        print('subnetId: ' + str(subnetId))
    else:
        # If there is no subnet, delete the endpoint
        print('subnetId not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return
```
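The four checks above repeat the same pattern. As a hypothetical refactor (not the approach used in this walkthrough), the required fields can live in one tuple and be checked together, so a single log line can report every missing setting at once. Note one behavioral difference: this version treats an empty value (such as `[]` or `""`) as missing for every field, not just `publicKeys`.

```python
# Hypothetical refactor: the settings every Dev Endpoint must supply
REQUIRED_SETTINGS = ("securityConfiguration", "securityGroupIds", "publicKeys", "subnetId")


def find_violations(request_parameters):
    """Return the required settings that are missing or empty, in a fixed order."""
    return [name for name in REQUIRED_SETTINGS if not request_parameters.get(name)]
```

In the handler, this could replace the four `if` blocks with `violations = find_violations(event['detail']['requestParameters'])`, followed by a single `deleteDevEndpoint(endpointName)` call when `violations` is non-empty. Applied to the sample `requestParameters` from CloudTrail earlier, it reports `securityGroupIds`, `publicKeys`, and `subnetId`.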

At this point we can write our deleteDevEndpoint function.

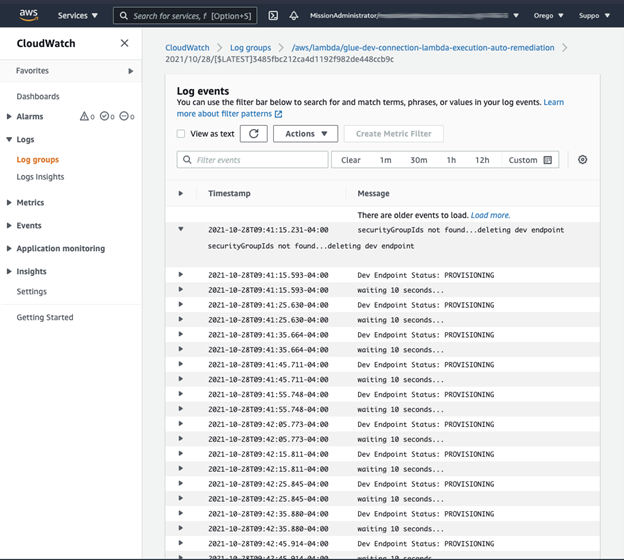

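Note: It can take five to ten minutes for a new Dev Endpoint to reach the READY state, and only then can we delete the resource. We account for this with a while loop that periodically checks the endpoint’s status. Because the function waits inside this loop, also raise the Lambda function’s timeout well above its 3-second default (the maximum is 15 minutes).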

```python
# Delete endpoint function
def deleteDevEndpoint(endpointName):
    glue_client = boto3.client('glue')
    endpointStatus = ''

    # Wait until the new endpoint is READY (or has FAILED) so it can be deleted without error
    while endpointStatus not in ('READY', 'FAILED'):
        response = glue_client.get_dev_endpoint(EndpointName=endpointName)
        endpointStatus = response['DevEndpoint']['Status']
        print('Dev Endpoint Status: ' + endpointStatus)
        if endpointStatus not in ('READY', 'FAILED'):
            print('waiting 10 seconds...')
            time.sleep(10)

    print('Dev Endpoint is ' + endpointStatus)
    print('attempting to delete endpoint...')

    # Once the endpoint is ready, attempt to delete it
    try:
        response = glue_client.delete_dev_endpoint(EndpointName=endpointName)
    except ClientError as exception_obj:
        # Log the error instead of printing the stale get_dev_endpoint response
        print(exception_obj)
        return None

    print(response)
    return response
```
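The wait loop polls with no upper bound, so an endpoint that stays in a non-ready state keeps the function running until Lambda times it out. Here is a sketch of a bounded alternative: `wait_for_terminal_status` (a hypothetical helper) polls a status function until it returns a terminal status or the next sleep would overrun a deadline. The `sleep` and `clock` parameters exist so the loop can be tested without actually waiting, and treating `FAILED` as terminal is an assumption about the statuses you care about.

```python
import time


class EndpointNotReady(Exception):
    """Raised when the endpoint has not reached a terminal status before the deadline."""


def wait_for_terminal_status(get_status, deadline_seconds, interval_seconds=10,
                             terminal=("READY", "FAILED"),
                             sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until it returns a terminal status or the deadline would pass."""
    start = clock()
    while True:
        status = get_status()
        print("Dev Endpoint Status: " + status)
        if status in terminal:
            return status
        # Give up early if another sleep would overrun the deadline
        if clock() - start + interval_seconds > deadline_seconds:
            raise EndpointNotReady(f"endpoint still {status} after {clock() - start:.0f}s")
        sleep(interval_seconds)
```

Inside Lambda, you could pass the handler’s `context` through and derive the deadline from `context.get_remaining_time_in_millis() / 1000 - 30` (leaving a 30-second margin), with `get_status=lambda: glue_client.get_dev_endpoint(EndpointName=endpointName)['DevEndpoint']['Status']`.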

Time to put it all together:

```python
import json
import time

import boto3
from botocore.exceptions import ClientError

# Create the Glue client once so warm Lambda invocations can reuse it
glue_client = boto3.client('glue')


def lambda_handler(event, context):
    # Fetch the new CloudTrail event
    print('New CloudTrail event received:')
    print(json.dumps(event))

    # Fetch the endpoint name
    endpointName = event['detail']['requestParameters']['endpointName']
    print('Endpoint Name: ' + endpointName)

    # Check for securityConfiguration in the event data
    if "securityConfiguration" in event['detail']['requestParameters']:
        securityConfiguration = event['detail']['requestParameters']['securityConfiguration']
        print('securityConfiguration: ' + securityConfiguration)
    else:
        # If there is no securityConfiguration, delete the endpoint
        print('securityConfiguration not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return

    # Check for securityGroupIds in the event data
    if "securityGroupIds" in event['detail']['requestParameters']:
        securityGroupIds = event['detail']['requestParameters']['securityGroupIds']
        print('securityGroupIds: ' + str(securityGroupIds))
    else:
        # If no security groups are found, delete the endpoint
        print('securityGroupIds not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return

    # Check for public keys in the event data
    if "publicKeys" in event['detail']['requestParameters']:
        publicKeys = event['detail']['requestParameters']['publicKeys']
        print('publicKeys: ' + str(publicKeys))
        # An empty list means no public keys were supplied, so delete the endpoint
        if publicKeys == []:
            print('publicKeys not found...deleting dev endpoint')
            deleteDevEndpoint(endpointName)
            return
        print('Public keys found...')
    else:
        print('publicKeys not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return

    # Check for a subnet in the event data
    if "subnetId" in event['detail']['requestParameters']:
        subnetId = event['detail']['requestParameters']['subnetId']
        print('subnetId: ' + str(subnetId))
    else:
        # If no subnet is found, delete the endpoint
        print('subnetId not found...deleting dev endpoint')
        deleteDevEndpoint(endpointName)
        return

    print('All security requirements satisfied...exiting...')


def deleteDevEndpoint(endpointName):
    endpointStatus = ''

    # Wait until the new endpoint is READY (or has FAILED) so it can be deleted without error
    while endpointStatus not in ('READY', 'FAILED'):
        response = glue_client.get_dev_endpoint(EndpointName=endpointName)
        endpointStatus = response['DevEndpoint']['Status']
        print('Dev Endpoint Status: ' + endpointStatus)
        if endpointStatus not in ('READY', 'FAILED'):
            print('waiting 10 seconds...')
            time.sleep(10)

    print('Dev Endpoint is ' + endpointStatus)
    print('attempting to delete endpoint...')

    # Once the endpoint is ready, attempt to delete it
    try:
        response = glue_client.delete_dev_endpoint(EndpointName=endpointName)
    except ClientError as exception_obj:
        print(exception_obj)
        return None

    print(response)
    return response
```

Now let’s set up the trigger on our Lambda function so that our EventBridge rule invokes it. It will look something like this in the Lambda function configuration menu. Make sure your trigger is enabled.
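Adding the trigger in the Lambda console also grants EventBridge permission to invoke the function. If you script this step instead, you need both pieces yourself: a resource-based permission via Lambda’s `add_permission` and the target via EventBridge’s `put_targets`. This sketch assumes you pass in `boto3.client("events")` and `boto3.client("lambda")` along with your rule name, rule ARN, and function ARN; the helper name, statement ID, and target ID are hypothetical.

```python
def connect_rule_to_lambda(events_client, lambda_client, rule_name, rule_arn, function_arn):
    """Let the rule invoke the function, then register the function as the rule's target."""
    # Resource-based permission scoped to this one rule via SourceArn
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",  # hypothetical ID; must be unique per function
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    response = events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "auto-remediation-lambda", "Arn": function_arn}],
    )
    # put_targets reports per-target failures in the response instead of raising
    return response.get("FailedEntryCount", 0) == 0
```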

Next, we make an insecure resource in Glue and watch our Lambda logs to see what our function does with the resource:

Looks like it works! Finally, you’ll want to test extensively with every permutation of secure and insecure resource settings to ensure your function behaves correctly.
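One way to cover every permutation of the four settings the handler checks is to generate sample events with `itertools.product`. This is a hypothetical test helper: the placeholder values in `SECURE_VALUES` are made up, and the events include only the fields the handler reads. Only the combination with all four settings present should pass; the other fifteen should trigger a deletion.

```python
import itertools

# Hypothetical placeholder values for each security setting under test
SECURE_VALUES = {
    "securityConfiguration": "test-secure",
    "securityGroupIds": ["sg-0123456789abcdef0"],
    "publicKeys": ["ssh-rsa AAAAEXAMPLE test-key"],
    "subnetId": "subnet-0123456789abcdef0",
}


def make_test_events(endpoint_name="test1"):
    """Yield (present_settings, event) for every combination of the four settings."""
    fields = list(SECURE_VALUES)
    for mask in itertools.product([False, True], repeat=len(fields)):
        params = {"endpointName": endpoint_name}
        present = []
        for field, include in zip(fields, mask):
            if include:
                params[field] = SECURE_VALUES[field]
                present.append(field)
        # Minimal EventBridge-shaped event carrying the CloudTrail request parameters
        event = {
            "source": "aws.glue",
            "detail-type": "AWS API Call via CloudTrail",
            "detail": {
                "eventSource": "glue.amazonaws.com",
                "eventName": "CreateDevEndpoint",
                "requestParameters": params,
            },
        }
        yield present, event
```

You could feed each event to `lambda_handler` with `deleteDevEndpoint` patched out via `unittest.mock.patch`, then assert that it was called for every case except the fully configured one. An empty `publicKeys` list is a separate case worth adding by hand.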

Optional: You may also want to send an SES or SNS alert to notify IT staff that a resource was deleted. You could pass the endpointName variable into the body of your email and send it to a distribution list. Details of what that function would look like are on this page.
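As a sketch of the SNS route (SES would work similarly but isn’t shown), the function below publishes a short message to a topic; subscribing a distribution list’s email address to that topic delivers it as an email. It assumes a `boto3.client("sns")` and a topic ARN you supply; the helper names and message wording are hypothetical, and the Lambda role would also need `sns:Publish` on that topic.

```python
# SNS rejects subjects longer than 100 characters
MAX_SUBJECT_LENGTH = 100


def build_deletion_message(endpoint_name, reason):
    """Plain-text body for the notification email."""
    return (
        f"Auto-remediation deleted Glue Dev Endpoint '{endpoint_name}'.\n"
        f"Reason: {reason}\n"
    )


def notify_deletion(sns_client, topic_arn, endpoint_name, reason):
    """Publish a deletion notice to an SNS topic and return the message ID."""
    subject = f"Glue Dev Endpoint {endpoint_name} deleted"[:MAX_SUBJECT_LENGTH]
    response = sns_client.publish(
        TopicArn=topic_arn,
        Subject=subject,  # used as the subject line for email subscriptions
        Message=build_deletion_message(endpoint_name, reason),
    )
    return response["MessageId"]
```

The `reason` argument could be the same string the handler already prints, such as `securityGroupIds not found`.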

Ongoing Maintenance

Like all AWS services and resources, these methods and API calls are subject to change, so there will be some overhead in maintaining these Lambda functions should the boto3 methods or the format of the CloudTrail JSON change.

You will want to set up an ongoing monitoring system to track the logs. For example, you could set up CloudWatch Logs filters to check for delete events, generate reports, populate a DynamoDB table, and track deletions per user. As previously stated, the workflow is limited only by your creativity.
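For the CloudWatch approach, a metric filter on the function’s log group can count the `...deleting dev endpoint` lines the handler prints, and you can then alarm or report on the resulting metric. This sketch assumes the default log group name `/aws/lambda/<function-name>` and a `boto3.client("logs")`; the filter name, metric name, and namespace are hypothetical.

```python
def create_deletion_metric_filter(logs_client, function_name):
    """Count remediation log lines that announce a Dev Endpoint deletion."""
    # Lambda writes to /aws/lambda/<function-name> unless configured otherwise
    log_group = f"/aws/lambda/{function_name}"
    logs_client.put_metric_filter(
        logGroupName=log_group,
        filterName="dev-endpoint-deletions",
        # A quoted term matches log events containing that exact phrase
        filterPattern='"deleting dev endpoint"',
        metricTransformations=[
            {
                "metricName": "DevEndpointDeletions",
                "metricNamespace": "AutoRemediation",
                "metricValue": "1",  # add 1 per matching log event
            }
        ],
    )
    return log_group
```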