Data science and machine learning applications are becoming more popular and widespread. To manage their pipelines, many organizations are turning to tools and services like Amazon SageMaker. With a plethora of available machine learning platforms, what is SageMaker, and why is it a good choice for MLOps?

SageMaker helps teams build, train and deploy machine learning models more easily, whether they’re experienced data scientists or professionals who rely on no-code tools. With its built-in integration with DevOps tools, the platform enables continuous delivery of machine learning applications while also streamlining the development process for MLOps teams.

Learn more about how Amazon SageMaker and MLOps work together and how you can leverage the platform for your projects.

Overview of SageMaker

Amazon SageMaker is a fully managed platform and comprehensive suite of tools that simplifies the development, training and deployment of machine learning (ML) models. It reduces the complexity of model development by providing a web-based interface for building ML pipelines, along with pre-built algorithms.

Many users start by working in SageMaker Studio, the service’s web-based integrated development environment (IDE). To help you get started, SageMaker comes with several preloaded algorithms and solution templates for supervised and unsupervised learning alike. The platform also provides access to popular deep learning frameworks like TensorFlow, MXNet and PyTorch.

After you’ve deployed models, you can keep track of them over time with SageMaker’s Model Monitor feature. It supports continuous monitoring of a real-time endpoint, continuous monitoring of a batch transform job that runs regularly and on-schedule monitoring of asynchronous batch transform jobs. Model Monitor also integrates with Amazon SageMaker Clarify to detect bias that emerges over time.

SageMaker’s other integrations include DevOps tools such as Jenkins and AWS CodePipeline. CodePipeline is a fully managed continuous delivery service that automates the build, test and deploy phases of a release process while still running the tests and approval steps your organization defines.

SageMaker also offers automatic model tuning (AMT), known as hyperparameter tuning, which helps MLOps teams efficiently find the best version of their models. AMT takes your algorithm and the ranges you specify for each hyperparameter, runs multiple training jobs on the dataset and reports the combination that performs best on an objective metric you choose.
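To make the idea concrete, here is a minimal local sketch of hyperparameter search in Python. It uses random search, one of the strategies AMT supports alongside Bayesian optimization, and replaces real training jobs with a hypothetical `train_and_evaluate` function whose toy objective is made up for illustration. It is not AMT’s implementation; in SageMaker, each trial would be a separate training job reporting an objective metric.

```python
import random


def train_and_evaluate(learning_rate: float, max_depth: int) -> float:
    """Hypothetical stand-in for one training job; returns an objective metric (higher is better)."""
    # Toy objective that peaks at learning_rate=0.1, max_depth=6
    return 1.0 - abs(learning_rate - 0.1) - 0.02 * abs(max_depth - 6)


def random_search(n_trials: int, seed: int = 0):
    """Try n_trials random combinations from the ranges and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            # Continuous range, sampled on a log scale between 0.001 and 1.0
            "learning_rate": 10 ** rng.uniform(-3, 0),
            # Integer range between 2 and 10 (inclusive)
            "max_depth": rng.randint(2, 10),
        }
        score = train_and_evaluate(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(n_trials=20)
print(best_params, round(best_score, 3))
```

Random search is shown because it fits in a few lines; AMT’s Bayesian strategy instead uses the results of earlier trials to choose the next combination to try.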

Another key feature of SageMaker is its ability to scale ML models quickly and efficiently. The platform can handle large amounts of data and provides the infrastructure for training and deploying models at scale, including distributed training across multiple instances. This makes it well suited to organizations that need to process large amounts of data quickly, such as financial institutions or healthcare providers.

In addition to technical services, there's a range of resources and support available to help MLOps teams get the most out of the platform. This includes SageMaker best practices, documentation, tutorials, online forums, Amazon’s support team and certified third-party providers.

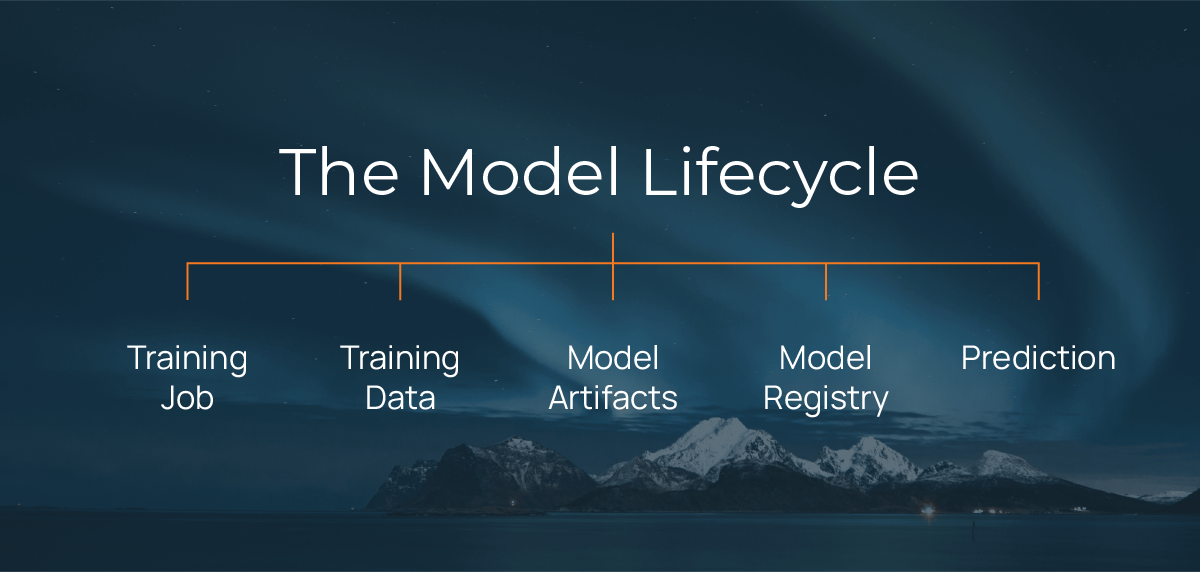

The Model Lifecycle

The model lifecycle is a critical aspect of any machine learning project and is often one of the biggest challenges for MLOps teams to manage. Here’s an overview of the model lifecycle.

Training Job

Submitting a training job to SageMaker is a simple and streamlined process. You’ll need to specify the ML algorithm, its hyperparameters, the input data location, an output location and an IAM role that grants SageMaker access to those resources. Users can also customize their training job by selecting specific SageMaker instance types and adding software libraries.
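The pieces listed above map onto the fields of SageMaker’s `CreateTrainingJob` API. The sketch below only assembles such a request as a Python dict so it can run without an AWS account; the image URI, IAM role ARN, bucket names, volume size, runtime limit and instance type are hypothetical placeholders you would replace with your own.

```python
import json


def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, hyperparameters,
                               instance_type="ml.m5.xlarge", instance_count=1):
    """Assemble a dict shaped like a CreateTrainingJob request (not sent anywhere)."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,    # container image that holds the algorithm
            "TrainingInputMode": "File",   # copy input data to the instance before training
        },
        "RoleArn": role_arn,               # IAM role SageMaker assumes to read/write S3
        # The API expects hyperparameter values as strings
        "HyperParameters": {k: str(v) for k, v in hyperparameters.items()},
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},  # where model artifacts land
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 30,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


request = build_training_job_request(
    job_name="demo-training-job-001",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/hypothetical-algorithm:latest",
    role_arn="arn:aws:iam::123456789012:role/HypotheticalSageMakerRole",
    train_s3_uri="s3://example-bucket/train/",
    output_s3_uri="s3://example-bucket/output/",
    hyperparameters={"max_depth": 6, "eta": 0.1},
)
print(json.dumps(request, indent=2))

# With AWS credentials configured, the same dict could be submitted via boto3:
#   boto3.client("sagemaker").create_training_job(**request)
```

Keeping the request in a plain dict makes it easy to review or version in source control before submitting it.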

The platform provides pre-built algorithms for common use cases, such as image classification and natural language processing.

Training Data

The quality and quantity of training data are critical factors that affect the accuracy and effectiveness of ML models. SageMaker provides tools for preprocessing and transforming data before it’s used for training, including data cleansing and feature engineering, which help to improve model accuracy and efficiency.
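As an illustration of those two steps, the sketch below shows the kind of logic a custom preprocessing script (for example, one run as a SageMaker Processing job) might apply: dropping incomplete rows, standardizing a numeric column and one-hot encoding a categorical one. The column names and records are made up, and real pipelines would typically use a library such as pandas or scikit-learn rather than plain Python.

```python
from statistics import mean, pstdev

# Hypothetical raw records; one row has a missing value
raw_rows = [
    {"age": 34, "income": 52000.0, "segment": "retail"},
    {"age": None, "income": 61000.0, "segment": "business"},
    {"age": 45, "income": 78000.0, "segment": "business"},
    {"age": 29, "income": 43000.0, "segment": "retail"},
]


def preprocess(rows):
    # Data cleansing: drop any row with a missing field
    clean = [r for r in rows if all(v is not None for v in r.values())]

    # Feature engineering: z-score the income column (mean 0, standard deviation 1)
    incomes = [r["income"] for r in clean]
    mu, sigma = mean(incomes), pstdev(incomes)

    # Feature engineering: one-hot encode the categorical segment column
    segments = sorted({r["segment"] for r in clean})

    features = []
    for r in clean:
        row = {"age": r["age"], "income_z": (r["income"] - mu) / sigma}
        for s in segments:
            row[f"segment_{s}"] = 1 if r["segment"] == s else 0
        features.append(row)
    return features


features = preprocess(raw_rows)
for f in features:
    print(f)
```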

Model Artifacts

After a training job is completed, SageMaker stores the trained model artifacts in Amazon S3 as a compressed archive (model.tar.gz). The archive contains whatever your training code saves, typically the model weights plus supporting files such as metadata, while the job’s hyperparameters and evaluation metrics are recorded with the training job itself. SageMaker helps MLOps teams manage these artifacts with features such as versioning and change tracking. This enables users to quickly and easily deploy new versions of the model without losing access to previous versions.

Model Registry

SageMaker Model Registry is an optional but recommended service for packaging model artifacts with deployment information. That information includes your deployment code, which container image to use and which instance types the model can be deployed on. The Model Registry decreases time to deployment because the information about how the model should be deployed is already in place.
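Registering a model version roughly corresponds to a `CreateModelPackage` request that bundles the container image, the S3 location of the trained artifacts and the supported instance types. The sketch below only builds that request as a dict; the group name, image URI, S3 path, content types and instance types are hypothetical.

```python
def build_model_package_request(group_name, image_uri, model_data_url,
                                approval_status="PendingManualApproval"):
    """Assemble a dict shaped like a CreateModelPackage request (not sent anywhere)."""
    allowed = {"Approved", "Rejected", "PendingManualApproval"}
    if approval_status not in allowed:
        raise ValueError(f"approval_status must be one of {sorted(allowed)}")
    return {
        "ModelPackageGroupName": group_name,  # each registration adds a new version to the group
        "ModelApprovalStatus": approval_status,
        "InferenceSpecification": {
            "Containers": [{
                "Image": image_uri,              # which inference container to use
                "ModelDataUrl": model_data_url,  # model.tar.gz produced by training
            }],
            "SupportedContentTypes": ["text/csv"],
            "SupportedResponseMIMETypes": ["text/csv"],
            # Which instance types the model may be deployed on
            "SupportedRealtimeInferenceInstanceTypes": ["ml.m5.large"],
            "SupportedTransformInstanceTypes": ["ml.m5.large"],
        },
    }


package_request = build_model_package_request(
    group_name="hypothetical-churn-models",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/hypothetical-inference:latest",
    model_data_url="s3://example-bucket/output/demo-training-job-001/output/model.tar.gz",
)
print(package_request["ModelApprovalStatus"])

# With AWS credentials configured:
#   boto3.client("sagemaker").create_model_package(**package_request)
```

Registering with `PendingManualApproval` lets a reviewer, or an automated check, approve a version before a CI/CD pipeline deploys it.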

Prediction

After you’ve trained and deployed your model in SageMaker, you can use it to generate predictions based on new data. There are two main ways to do this.

- Endpoint deployment: Deploy your model to an endpoint, allowing users and applications to send API requests to get model predictions in real time.

- Batch predictions: Use batch transform to generate predictions on large amounts of data when you don’t need an immediate response.
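The two options correspond to different API calls: a real-time request goes to the `InvokeEndpoint` operation of the SageMaker Runtime service, while batch predictions run as a batch transform job (`CreateTransformJob`). This sketch builds both requests as dicts; the endpoint, model, job and bucket names are hypothetical, and CSV input is an assumption.

```python
def build_invoke_request(endpoint_name, record):
    """Keyword arguments shaped like a SageMaker Runtime InvokeEndpoint call (one record)."""
    return {
        "EndpointName": endpoint_name,
        "ContentType": "text/csv",
        "Body": ",".join(str(x) for x in record),  # one CSV row per request
    }


def build_transform_job_request(job_name, model_name, input_s3_uri, output_s3_uri):
    """Dict shaped like a CreateTransformJob request (scores a whole S3 prefix offline)."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix",
                                            "S3Uri": input_s3_uri}},
            "ContentType": "text/csv",
            "SplitType": "Line",  # treat each line of the input files as one record
        },
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        "TransformResources": {"InstanceType": "ml.m5.large", "InstanceCount": 1},
    }


invoke_kwargs = build_invoke_request("hypothetical-endpoint", [34, 52000.0, 1])
transform_request = build_transform_job_request(
    "hypothetical-batch-job-001", "hypothetical-model",
    "s3://example-bucket/batch-input/", "s3://example-bucket/batch-output/",
)
print(invoke_kwargs["Body"])

# With AWS credentials configured:
#   boto3.client("sagemaker-runtime").invoke_endpoint(**invoke_kwargs)   # real time
#   boto3.client("sagemaker").create_transform_job(**transform_request)  # batch
```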

Benefits of SageMaker for MLOps Teams

Amazon SageMaker helps MLOps teams build, train and deploy models in less time and with less manual effort. Here’s what some of those benefits look like.

Integrations with DevOps Tools

The platform integrates with popular DevOps tools, like AWS CodePipeline and Jenkins, through SageMaker projects, the SageMaker APIs and SDKs, or other methods. This allows businesses to create continuous integration and continuous deployment (CI/CD) pipelines for continuous delivery of ML models.

These integrations provide a streamlined, standard process for packaging and deploying ML models, helping your MLOps team produce models faster and with fewer errors. Additionally, Amazon SageMaker Pipelines helps ML scientists create end-to-end workflows at scale for a variety of applications.

SageMaker also supports auto-scaling for deployed models. Once you define a scaling policy, the platform dynamically adjusts the infrastructure behind a model in response to changing workloads and demand, with no manual adjustments needed.

Scalability

The scalability of Amazon SageMaker allows MLOps teams to deploy their models at scale with little additional configuration or manual intervention, which accelerates the deployment process.

Auto-scaling capabilities automatically scale models up or down depending on usage needs. This ensures that resources are used efficiently while also keeping models available when needed most.
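Endpoint auto-scaling is configured through Application Auto Scaling: you register the endpoint variant’s instance count as a scalable target, then attach a target-tracking policy. The sketch below builds both requests as dicts; the endpoint name, the `AllTraffic` variant name, the capacity limits, the target of 100 invocations per instance and the cooldown values are assumptions to tune for your workload.

```python
def build_autoscaling_requests(endpoint_name, variant_name="AllTraffic",
                               min_capacity=1, max_capacity=4,
                               target_invocations_per_instance=100.0):
    """Return (register_scalable_target kwargs, put_scaling_policy kwargs) as dicts."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"  # what gets scaled: instance count
    register = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,  # never scale below this many instances
        "MaxCapacity": max_capacity,  # never scale above this many instances
    }
    policy = {
        "PolicyName": f"{endpoint_name}-invocations-target",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Add or remove instances to keep invocations per instance near this value
            "TargetValue": target_invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,  # seconds to wait after scaling in
            "ScaleOutCooldown": 60,  # seconds to wait after scaling out
        },
    }
    return register, policy


register_kwargs, policy_kwargs = build_autoscaling_requests("hypothetical-endpoint")
print(register_kwargs["ResourceId"])

# With AWS credentials configured:
#   aas = boto3.client("application-autoscaling")
#   aas.register_scalable_target(**register_kwargs)
#   aas.put_scaling_policy(**policy_kwargs)
```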

Powerful Monitoring Features

SageMaker helps MLOps teams track model performance after deployment. For example, Model Monitor uses continuous and on-schedule monitoring to track changes in data quality, model quality, prediction bias and feature attribution.

MLOps teams can receive alerts, for example through Amazon CloudWatch alarms on the metrics Model Monitor emits, when specific thresholds are exceeded or anomalies are detected. SageMaker also provides detailed reports and visualizations to analyze model performance and identify areas for improvement.
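The basic pattern behind threshold alerts is to compare live data against a baseline and flag anything that moves too far. The sketch below uses a deliberately simplified rule (relative change in a feature’s mean, with a hypothetical 20% threshold); it is not the statistic Model Monitor computes, and the feature names and values are made up.

```python
from statistics import mean


def drift_alerts(baseline, live, threshold=0.2):
    """Flag features whose live mean moved more than `threshold` (relative) from the baseline mean."""
    alerts = []
    for feature, base_values in baseline.items():
        base_mean = mean(base_values)
        live_mean = mean(live[feature])
        # Guard against division by zero when the baseline mean is 0
        relative_shift = abs(live_mean - base_mean) / max(abs(base_mean), 1e-12)
        if relative_shift > threshold:
            alerts.append((feature, round(relative_shift, 3)))
    return alerts


baseline = {"age": [30, 40, 50], "income": [50000, 60000, 70000]}
live = {"age": [31, 41, 49], "income": [80000, 90000, 85000]}

alerts = drift_alerts(baseline, live)
print(alerts)  # [('income', 0.417)]
```

Here only `income` is flagged: its mean rose from 60,000 to 85,000 (about 42%), while the mean of `age` barely moved.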

Using SageMaker for MLOps

Learn about four Amazon SageMaker features that MLOps teams are using to augment their ML efforts.

SageMaker Studio

SageMaker Studio is an IDE for building and training ML models. It provides a unified, web-based interface to manage your data, code and models in a single place. SageMaker Studio also provides easy scalability and reliable security.

SageMaker Studio is designed for team-based work. Team members can easily collaborate by sharing notebooks. In SageMaker, a notebook is a Jupyter notebook: a web-based document that lets data scientists and developers combine code, visualizations and narrative text. Notebooks provide a secure and collaborative environment for ML projects, allowing users to easily track changes while keeping their data and code organized.

SageMaker Pipelines

SageMaker Pipelines is a powerful tool for building, deploying and managing end-to-end ML workflows. Users can create automated workflows that cover data preparation, model training and deployment, all from a single interface. Because Pipelines records each step’s inputs, outputs and parameters, you can easily track and re-create models.

Pipelines is designed for ongoing use, with Amazon-provided templates plus the ability to store and reuse workflow steps as needed.
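Under the hood, a pipeline is a directed acyclic graph (DAG): each step runs once the steps it depends on have finished, and independent steps can run in parallel. The sketch below illustrates that scheduling idea with Python’s standard `graphlib` module (Python 3.9+) rather than the SageMaker Pipelines SDK; the step names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the set of steps it depends on
steps = {
    "preprocess": set(),
    "train": {"preprocess"},
    "baseline_stats": {"preprocess"},
    "evaluate": {"train"},
    "register_model": {"evaluate", "baseline_stats"},
    "deploy": {"register_model"},
}

ts = TopologicalSorter(steps)
ts.prepare()  # also raises CycleError if the graph is not acyclic

waves = []
while ts.is_active():
    ready = sorted(ts.get_ready())  # steps whose dependencies are all done
    waves.append(ready)             # these could run in parallel
    ts.done(*ready)

for i, wave in enumerate(waves, start=1):
    print(f"wave {i}: {wave}")
```

Here `baseline_stats` and `train` land in the same wave because neither depends on the other.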

SageMaker Debugger

Training errors can cost ML teams valuable time, especially if they aren’t sure where the error is occurring. SageMaker Debugger addresses this problem with real-time, detailed monitoring of the training process. It captures data such as model weights, gradients and losses during training, tracks model accuracy and helps diagnose model performance issues. Debugger supports common ML frameworks and integrates with AWS Lambda, for example to stop a training job automatically when a rule detects a problem.

When Debugger spots an issue with training, it sends automatic, configurable alerts. It also comes with built-in analytics and the ability to create custom conditions and reports.
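Debugger’s built-in rules include checks for common training problems, such as a loss that stops decreasing. The sketch below is a simplified local version of that kind of rule; the function, the five-step window and the 1% minimum improvement are hypothetical choices, not Debugger’s actual implementation or parameters.

```python
def loss_not_decreasing(losses, window=5, min_rel_improvement=0.01):
    """Return True when the newest loss has not improved by at least
    min_rel_improvement (as a fraction) over the loss `window` steps earlier."""
    if len(losses) <= window:
        return False  # not enough history to judge yet
    previous, current = losses[-window - 1], losses[-1]
    if previous == 0:
        return False  # avoid dividing by zero; a zero loss cannot improve further
    return (previous - current) / abs(previous) < min_rel_improvement


healthy = [1.0, 0.8, 0.65, 0.55, 0.48, 0.43, 0.39]
stalled = [1.0, 0.8, 0.7, 0.69, 0.69, 0.69, 0.69, 0.69, 0.69]

for name, series in [("healthy", healthy), ("stalled", stalled)]:
    if loss_not_decreasing(series):
        # In Debugger, a triggered rule could raise an alert or stop the job
        print(f"ALERT: loss not decreasing in {name} run")
```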

SageMaker Model Monitor

Model Monitor helps your team continuously monitor ML models for accuracy and drift. As with SageMaker Debugger, you can set alerts that are automatically triggered when problems are detected, such as drift in model quality. With Model Monitor, users can track model performance, catch issues early and ensure that changes to the model don’t adversely affect performance.

Model Monitor generates reports based on the monitoring schedules you set up, whether you’re continuously monitoring a real-time endpoint, monitoring a batch transform job that runs regularly or monitoring asynchronous batch transform jobs on a schedule.

Continuing Your SageMaker Journey

Amazon SageMaker is a robust offering, with solid documentation from Amazon on getting started and optimizing your efforts. Combining MLOps practices with SageMaker can improve your organization’s speed, efficiency and effectiveness at deploying useful ML models at scale.

As you get started with powerful ML tools like these, remember that SageMaker is aimed at a range of expertise levels, from business analysts to ML engineers. Answering the question “What is SageMaker?” yields different answers depending on your team’s makeup and ML experience. By working with a knowledgeable partner like Mission Cloud, you can diagnose your needs, assess your in-house capabilities and create a plan for realizing the platform’s full potential.

Contact us today to schedule a complimentary 1-hour session with a Mission Solutions Architect to discuss your machine learning questions. They can help you navigate the complexities of SageMaker and provide personalized recommendations for your specific use case.

FAQ

- How does Amazon SageMaker ensure the security of machine learning models and data throughout the ML lifecycle?

Amazon SageMaker incorporates AWS's robust security features to protect machine learning models and data. These features include encryption in transit and at rest, identity and access management, and network isolation capabilities to ensure that ML workloads are secure throughout their lifecycle.

- Can SageMaker be integrated with third-party tools and services, and if so, which are commonly used alongside it?

SageMaker can integrate with various third-party tools and services, enhancing its functionality and flexibility. This includes integration with popular development, monitoring, and CI/CD tools, allowing teams to incorporate SageMaker seamlessly into their existing MLOps workflows.

- What are the initial setup costs and ongoing expenses associated with using Amazon SageMaker for machine learning projects?

There are no upfront setup fees or minimum commitments for Amazon SageMaker; ongoing costs vary based on usage, including data processing, model training, and deployment. AWS provides a pay-as-you-go pricing model, enabling users to scale their use of SageMaker services according to their project needs and budget constraints.